🧠 Nvidia (NVDA) Q3 FY2026 Earnings — Core Brief Edition

Market Wrap 2026-03-01

Today's US Market Wrap: Key Points

* Equities declined amid credit and geopolitical risks but recovered some of their losses. Sector performance was mixed; Energy outperformed.
* Treasuries rallied on geopolitical tensions and private-credit concerns. PPI data came in strong, but inflation fears remained contained.
* Crude oil rose due to

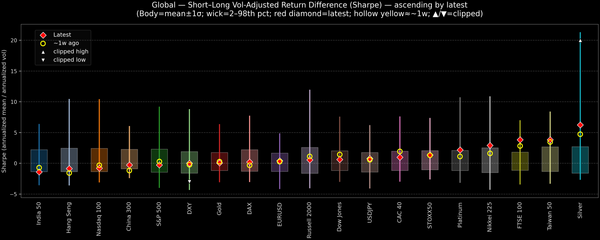

This snapshot compares short-term and long-term performance on a risk-adjusted basis. The metric shows how far the near-term, volatility-adjusted return deviates from the longer-run trend. Positive readings indicate short-term strength outpacing the long-term profile; negative readings suggest the opposite. Assets are ordered by the latest reading; bodies show mean
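The snapshot does not spell out its formula, so the following is only a minimal sketch of one plausible reading: compute a Sharpe-style ratio (annualized mean log return divided by annualized volatility, zero risk-free rate) over a short window and over a long window, then take the difference. The 21-day and 252-day windows, the 252-day annualization, and the function names `risk_adjusted_return` and `short_vs_long_gap` are all hypothetical choices, not the chart's actual definition.

```python
import math
import statistics

TRADING_DAYS = 252  # assumption: annualization convention


def log_returns(prices):
    """Daily log returns from a list of closing prices."""
    return [math.log(b / a) for a, b in zip(prices, prices[1:])]


def risk_adjusted_return(returns):
    """Annualized mean return divided by annualized volatility.

    Assumes a zero risk-free rate (hypothetical simplification).
    """
    mean = statistics.fmean(returns) * TRADING_DAYS
    vol = statistics.stdev(returns) * math.sqrt(TRADING_DAYS)
    if vol == 0:
        raise ValueError("zero volatility: ratio undefined")
    return mean / vol


def short_vs_long_gap(prices, short_window=21, long_window=252):
    """Short-window risk-adjusted return minus the long-window one.

    Positive: near-term strength outpacing the long-run profile.
    Negative: the opposite. Window lengths are hypothetical defaults.
    """
    rets = log_returns(prices)
    if len(rets) < long_window:
        raise ValueError("need at least long_window daily returns")
    short = risk_adjusted_return(rets[-short_window:])
    long = risk_adjusted_return(rets[-long_window:])
    return short - long
```

Using a difference of ratios (rather than raw returns) keeps a volatile asset from showing a large reading just because it swings more; whether the original chart normalizes this way is an assumption.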

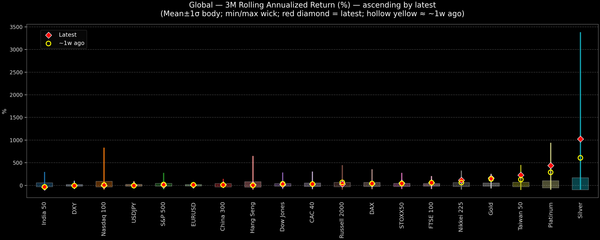

This snapshot tracks 3-month rolling annualized returns across global indices, FX, and commodities. Assets are ordered by the latest reading; bodies show mean ±1σ while wicks span historical min–max. A red diamond marks today, and a hollow yellow dot highlights roughly one week ago.
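The chart elements described above can be reproduced from a price history with a short calculation. This is a sketch under stated assumptions, not the chart's actual code: "3 months" is taken as 63 trading days, returns are annualized geometrically with a 252-day year, and "roughly one week ago" is taken as 5 trading days back. The `Candle` dataclass and its field names are hypothetical.

```python
import statistics
from dataclasses import dataclass

WINDOW = 63          # assumption: ~3 months of trading days
TRADING_DAYS = 252   # assumption: annualization convention
WEEK = 5             # assumption: "roughly one week ago" = 5 trading days


@dataclass
class Candle:
    body_low: float   # mean - 1 sigma
    body_high: float  # mean + 1 sigma
    wick_low: float   # historical minimum
    wick_high: float  # historical maximum
    today: float      # latest reading (red diamond)
    week_ago: float   # reading ~1 week earlier (hollow yellow dot)


def rolling_annualized_returns(prices, window=WINDOW):
    """Geometrically annualize each trailing `window`-day price return."""
    scale = TRADING_DAYS / window
    return [(prices[i] / prices[i - window]) ** scale - 1
            for i in range(window, len(prices))]


def candle(prices):
    """Summarize the rolling series as the chart's body, wicks and markers."""
    series = rolling_annualized_returns(prices)
    if len(series) <= WEEK:
        raise ValueError("price history too short")
    mu = statistics.fmean(series)
    sigma = statistics.stdev(series)
    return Candle(
        body_low=mu - sigma,
        body_high=mu + sigma,
        wick_low=min(series),
        wick_high=max(series),
        today=series[-1],
        week_ago=series[-1 - WEEK],
    )
```

Sorting assets by `today` would then reproduce the ordering "by the latest reading".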

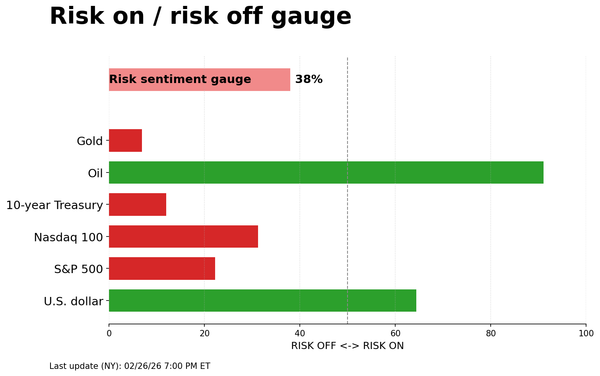

Today’s cross-asset tape skews risk-on: equity futures and oil are carrying the tone while the dollar, Treasurys and gold struggle to attract meaningful sponsorship. The gauge’s one-day read flags a market more interested in adding risk than hiding in classic hedges. The multi-year history tells the more important